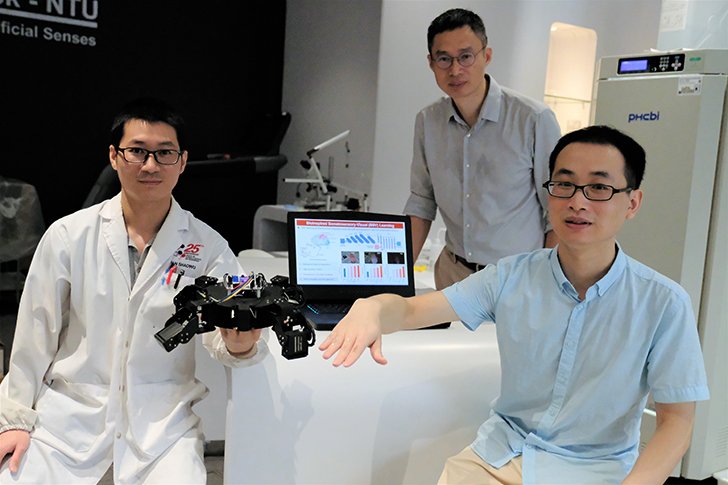

Image credit: NTU

Scientists from Nanyang Technological University, Singapore (NTU Singapore) have developed an Artificial Intelligence (AI) system that recognises hand gestures by combining skin-like electronics with computer vision.

The recognition of human hand gestures by AI systems has been a valuable development over the last decade and has been adopted in high-precision surgical robots, health monitoring equipment and gaming systems.

The NTU team has created a 'bio-inspired' data fusion system that uses skin-like stretchable strain sensors made from single-walled carbon nanotubes, together with an AI approach that resembles the way the brain handles the skin's senses and vision together.

The NTU scientists developed their bio-inspired AI system by combining three neural network approaches in one system: a 'convolutional neural network', a machine learning method used here for early visual processing; a multilayer neural network for early somatosensory information processing; and a 'sparse neural network' that 'fuses' the visual and somatosensory information.
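
To make the three-part design more concrete, the following is a minimal NumPy sketch of how such a fusion network could be wired together. It is an illustration under stated assumptions, not the NTU team's implementation: the image size, the number of strain sensors (`N_SENSORS`), the number of gesture classes (`N_GESTURES`), the layer widths and all function names are hypothetical, the weights are random and untrained, and the 'sparse' fusion layer is modelled as a fixed random binary mask, which is only one simple way of obtaining sparse connectivity.

```python
import numpy as np

rng = np.random.default_rng(0)

# All sizes below are assumptions chosen for illustration only.
IMAGE_SIZE = 32      # hypothetical grayscale input of 32 x 32 pixels
N_KERNELS = 8        # hypothetical number of convolution filters
N_SENSORS = 5        # hypothetical number of strain sensor channels
N_GESTURES = 10      # hypothetical number of gesture classes
KEEP_FRACTION = 0.2  # hypothetical share of fusion weights left active


def relu(x):
    return np.maximum(x, 0.0)


def conv2d_valid(image, kernels):
    """Naive 'valid' 2-D cross-correlation of one image with K kernels."""
    windows = np.lib.stride_tricks.sliding_window_view(image, kernels.shape[1:])
    # windows has shape (H - kh + 1, W - kw + 1, kh, kw)
    return np.einsum("ijab,kab->kij", windows, kernels)


def visual_branch(image, kernels):
    """Convolution, ReLU, then global average pooling to one value per kernel."""
    feature_maps = relu(conv2d_valid(image, kernels))
    return feature_maps.mean(axis=(1, 2))


def somatosensory_branch(strain, w1, b1, w2, b2):
    """Two-layer perceptron over the strain sensor readings."""
    hidden = relu(strain @ w1 + b1)
    return relu(hidden @ w2 + b2)


def sparse_fusion(visual, somato, weights, mask, bias):
    """Concatenate both feature vectors and classify through masked weights."""
    fused = np.concatenate([visual, somato])
    logits = fused @ (weights * mask) + bias
    exp = np.exp(logits - logits.max())  # numerically stable softmax
    return exp / exp.sum()


# Random, untrained parameters.
kernels = rng.normal(size=(N_KERNELS, 3, 3))
w1, b1 = rng.normal(size=(N_SENSORS, 16)), np.zeros(16)
w2, b2 = rng.normal(size=(16, 8)), np.zeros(8)
fusion_w = rng.normal(size=(N_KERNELS + 8, N_GESTURES))
fusion_mask = rng.random(fusion_w.shape) < KEEP_FRACTION
fusion_b = np.zeros(N_GESTURES)

# Placeholder inputs standing in for a camera frame and sensor readings.
image = rng.random((IMAGE_SIZE, IMAGE_SIZE))
strain = rng.random(N_SENSORS)

visual_features = visual_branch(image, kernels)
somato_features = somatosensory_branch(strain, w1, b1, w2, b2)
probs = sparse_fusion(visual_features, somato_features,
                      fusion_w, fusion_mask, fusion_b)
predicted_gesture = int(np.argmax(probs))
```

In this sketch each modality is first processed on its own, and the two feature vectors only meet in the final layer, where most connections are switched off by the mask. With untrained weights the predicted class is arbitrary; the point is the data flow, not the output.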

The result is a system that can recognise human gestures more accurately and efficiently than existing methods.

The NTU research team is now looking to build a virtual reality (VR) and augmented reality (AR) system based on this AI system, for use in areas where high-precision recognition and control are desired, such as entertainment technologies and rehabilitation in the home.